
Roblox is improving its safety measures for kids' chat by using AI. The decision comes as the platform caters to a growing audience, including older users. With billions of chat messages sent each day and a wide range of user-created content, Roblox faces a real challenge in moderating the platform to ensure a safe and friendly environment for players.

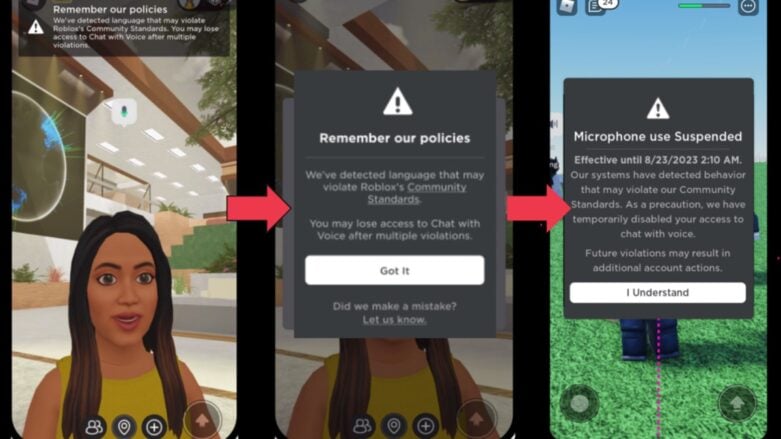

Roblox's safety approach is centered on a combination of artificial intelligence and human expertise. The company's text filter, refined for Roblox-specific terminology, can now identify policy-violating content in all supported languages. This is particularly important considering the recent launch of real-time AI chat translation. Additionally, an original in-house system moderates voice communication in real time, flagging inappropriate speech within seconds. To complement this, real-time notifications inform users when their speech approaches violating Roblox's guidelines, reducing abuse reports by over 50%.

Roblox carefully moderates visual content using computer vision technology to evaluate user-created avatars, accessories, and 3D models. Items can be automatically approved, flagged for human review, or rejected when necessary. This process also examines the individual parts of a model to prevent potentially harmful combinations from being overlooked.
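As a rough illustration of the three outcomes described above, here is a minimal sketch of score-based triage. The thresholds, the `Part` type, and the risk scores are all hypothetical; Roblox has not published how its system decides, so this only shows the general idea of checking a model's parts as well as the whole.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


# Hypothetical thresholds, not values published by Roblox.
REVIEW_THRESHOLD = 0.4
REJECT_THRESHOLD = 0.9


@dataclass
class Part:
    name: str
    risk: float  # hypothetical classifier score in [0, 1]


def triage(score: float) -> Decision:
    """Map a single risk score to one of the three outcomes."""
    if score >= REJECT_THRESHOLD:
        return Decision.REJECT
    if score >= REVIEW_THRESHOLD:
        return Decision.HUMAN_REVIEW
    return Decision.APPROVE


def triage_model(whole_score: float, parts: List[Part]) -> Decision:
    """Decide on the worst score among the whole model and each of its parts."""
    worst = max([whole_score] + [p.risk for p in parts])
    return triage(worst)
```

In this sketch, a model that looks harmless as a whole is still sent to review or rejected if any single part scores badly, which is one simple way to avoid overlooking a problem hidden inside an item.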

This is just one of the many things Roblox has done. They've supported a bill in California for child safety, which is a big deal. However, even with the heat on their payment terms, they don't think they're exploiting children with their payment methods.

The company is creating multimodal AI systems that are trained on various kinds of data to improve accuracy. This technology helps Roblox identify how different content elements could lead to harmful situations that a unimodal AI might not catch. Essentially, it is a bunch of AIs working together instead of a single AI expected to handle everything. For example, an innocent-looking avatar could cause issues when paired with a harassing text message. A good example is when a Netflix logo is used as a profile image for a TikTok user, and then the six-letter username is a banned word that starts with N but doesn't use an N (because the profile picture does that for them).
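The profile-picture example can be sketched in a few lines. This is only an illustration of why a combined check catches what a text-only check misses: the blocklist uses a harmless placeholder word, and `image_text` stands in for whatever an image model might read from the picture. None of these names come from Roblox.

```python
# Hypothetical blocklist; a harmless placeholder stands in for a real banned word.
BANNED_WORDS = {"nbadword"}


def text_only_check(username: str) -> bool:
    """Return True if the username on its own contains a banned word."""
    return any(word in username.lower() for word in BANNED_WORDS)


def combined_check(image_text: str, username: str) -> bool:
    """Return True if text read from the image plus the username forms a banned word.

    image_text is a stand-in for the output of an image model (for example,
    a letter recognised in a logo); no real vision model is called here.
    """
    combined = (image_text + username).lower()
    return any(word in combined for word in BANNED_WORDS)
```

Here `text_only_check("badword")` passes the username because the leading letter is missing, while `combined_check("N", "badword")` flags it once the letter supplied by the image is taken into account.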

While automation is important for handling a large amount of moderation work, Roblox stresses the ongoing need for human involvement. Moderators tune AI models, deal with complicated situations, and ensure overall accuracy. Specifically for serious investigations, such as those related to extremism or child endangerment, specialized internal teams take action to identify and handle the bad actors.